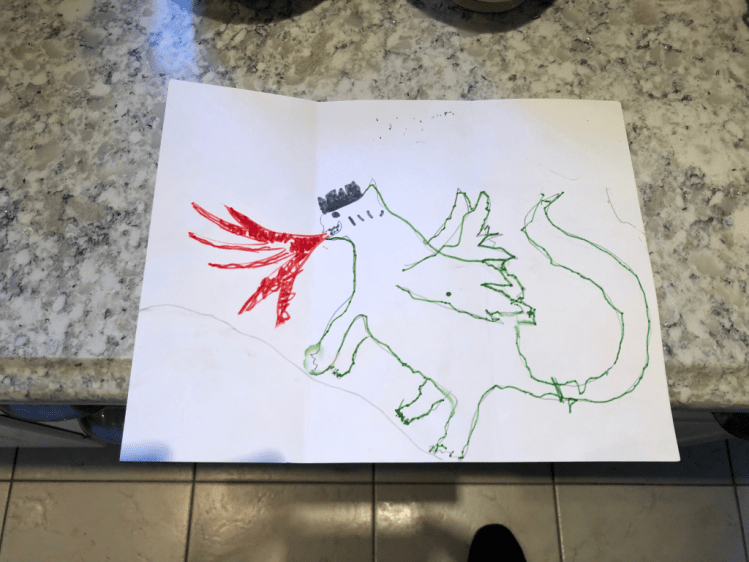

I recently went up to visit my mom for her birthday and while up there came across this pretty cute birthday card on the mantel:

The author of this amusing well-wish is my mom’s latest ward, 5-year-old Peter from up the block. This is a pretty badass dragon if you ask me! Five-year-old me would nod with silent approval.

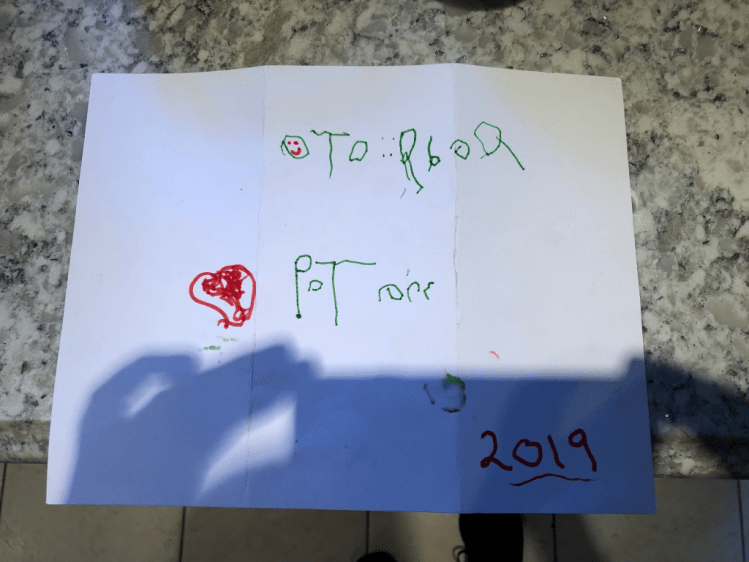

It’s fun because you have to puzzle out the letters a bit. Some are pretty near the mark, like the T and O, but a few others, such as the various e’s, are more than a little obscure. To an outsider they might be illegible, but for someone in possession of a brain and some context they’re not altogether impossible to discern. My mother’s name is Rhea and it’s her birthday, therefore the meaning of the young author’s scribbles is “To Rhea, Peter”.

This got me to thinking: What would a machine make of this birthday card? Put more provocatively: Can a neural net read a 5 year old’s handwriting?

Well, we don’t have to guess the answer to this question; we can try it.

The neural net we’ll use is the kind that’s all the rage these days: a convolutional neural net with several hidden layers. You’ve probably heard them go by the name of “deep learning” and other fancy buzzwords. They’re more or less the same sort of nets that power the machine learning products you’re familiar with, like Alexa, Facebook’s image classification and, presumably, Tesla’s self-driving cars.

We’ll use PyTorch, a machine learning library for Python developed by Facebook.

A fair amount of data massaging was required, but the reader versed in machine learning will know that that’s most of the work. The code here took barely half an hour to cobble together. The image manipulation, on the other hand, took a few hours. (I probably could have sped that up by writing a script to do these operations, but then I would have had to figure that out, which would’ve taken who knows how long. The dilemmas of the lazy man!)

Here’s an example of the processed data:

But isn’t that cheating?! No, not really. I’m just working around the difference in conditions between the birthday card and the training data set, EMNIST. The data set is in black and white; the birthday card is green text on a white background.
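For the curious, here is roughly what a script for that kind of conversion could look like. It is only a sketch and not the steps actually used for this post: it assumes NumPy, an RGB crop of a single letter held in an array, and a hypothetical helper `to_emnist_style` with a hand-picked threshold. It also skips resizing and centering the letter to the data set's image size.

```python
import numpy as np

def to_emnist_style(rgb, threshold=0.3):
    """Turn colored ink on light paper into a black-and-white mask.

    rgb: uint8 array of shape (H, W, 3).
    Returns a float32 array of shape (H, W): 1.0 where there is ink,
    0.0 where there is paper. The threshold is an assumption and
    would need tuning per image.
    """
    # Average the three channels for a rough grayscale in [0, 1].
    gray = rgb.astype(np.float32).mean(axis=2) / 255.0
    # Invert so that ink becomes bright and paper becomes dark.
    inverted = 1.0 - gray
    # Hard threshold to pure black and white.
    return (inverted > threshold).astype(np.float32)

# Tiny demo: a white 2x2 "page" with one green marker pixel.
page = np.full((2, 2, 3), 255, dtype=np.uint8)
page[0, 1] = (0, 128, 0)
mask = to_emnist_style(page)
print(mask)
```

Averaging the channels is the crudest possible grayscale; a marker color closer to the paper color would call for a smarter conversion or a different threshold.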

So, now on to the experiment: Using a net with 2 convolutional layers, 2 fully connected layers, max pooling and 4 epochs (number of times you run over the training data), here are the results.
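A net of that shape might look something like the sketch below. Only the layer counts and the max pooling come from the description above; the class name `LetterNet`, the channel counts, kernel sizes, hidden width and the 28x28 single-channel input are assumptions for illustration, and the training loop is left out.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class LetterNet(nn.Module):
    """Two conv layers, max pooling, two fully connected layers.

    All sizes here are illustrative assumptions, not the values
    used for the experiment in this post.
    """

    def __init__(self, num_classes=26):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 16, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(16, 32, kernel_size=3, padding=1)
        self.pool = nn.MaxPool2d(2)
        self.fc1 = nn.Linear(32 * 7 * 7, 128)
        self.fc2 = nn.Linear(128, num_classes)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))  # 28x28 -> 14x14
        x = self.pool(F.relu(self.conv2(x)))  # 14x14 -> 7x7
        x = torch.flatten(x, 1)               # keep the batch dimension
        x = F.relu(self.fc1(x))
        return self.fc2(x)                    # one raw score per letter

model = LetterNet()
# A batch of 4 blank 28x28 grayscale images, just to check the shapes.
logits = model(torch.zeros(4, 1, 28, 28))
print(logits.shape)  # torch.Size([4, 26])
```

The output is one score per letter for each image; the predicted letter is whichever score is largest.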

Actual message:

TO RHEA

PETER

Machine read:

TO RHOA

PPTOR

At first glance this might not seem too good, but it definitely got a good portion of the message correct: 8/11 characters, or about 73%. If this were a test to pass the first grade, we’d make the cut. And if you consider it from the perspective of chance, we can definitely see that our net is working to some extent.
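The score is easy to check by lining the two messages up letter by letter. This little sketch just compares the strings from above and ignores the space between the words:

```python
actual = "TO RHEA PETER"
predicted = "TO RHOA PPTOR"

# Pair up the characters, skipping the spaces between words.
pairs = [(a, p) for a, p in zip(actual, predicted) if a != " "]
correct = sum(a == p for a, p in pairs)
total = len(pairs)

print(f"{correct}/{total} = {correct / total:.0%}")  # 8/11 = 73%
```

All three misses are e’s.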

There are 26 possible characters (letters in the alphabet) and 11 letters in the message. So if our program was just guessing, the chance that it would correctly guess every letter is an astronomical 1 in 3,670,344,486,987,776. Looking at it from the other direction: what are the chances that it would correctly guess 8/11 characters?

1/208,827,064,576 (that’s 1/26^8), or about a 1 in 200 billion chance of nailing eight given letters. Strictly speaking the three misses could land anywhere in the message, which brings the odds of eight or more hits to roughly 1 in 1.4 billion, but that’s still not something you get by luck.

So with that in mind we might not thumb our nose at the little program so lightly.
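These odds can be checked with a few lines of Python. The sketch assumes a guesser that picks each of the 26 letters with equal probability, independently for each position. It also works out the binomial version of the question, where the eight hits may fall on any of the eleven positions.

```python
from fractions import Fraction
from math import comb

n = 11               # letters in the message
p = Fraction(1, 26)  # chance of guessing one letter correctly

all_right = p ** n       # every letter correct
eight_specific = p ** 8  # eight particular letters correct

# Eight or more hits, allowing the misses to fall anywhere.
at_least_8 = sum(
    comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(8, n + 1)
)

print(1 / all_right)          # 3670344486987776
print(1 / eight_specific)     # 208827064576
print(float(1 / at_least_8))  # about 1.4 billion
```

Using `Fraction` keeps the arithmetic exact, so nothing gets lost to floating point until the final print.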

The astute reader will realize that this is a very bad experiment scientifically: the writers who contributed to the EMNIST data set were all adults and were given specific paper and a marker to write with. Those conditions are totally different from our young author’s: a green Crayola marker and construction paper. Nonetheless, I think the experiment is telling.

Perhaps more interesting than evaluating the machine: we can evaluate the kid. Technically minded readers will recognize this as what’s termed a reverse Turing test: given an individual’s actions, how sure are we that they are in fact a human and not a machine?

In our case it would be more like: how close is our kid to being an adult in terms of handwriting? If he’s basically writing at an adult level, we would expect our program to read his message 100% accurately, or very near to it. Well, based on the test, I’d say pretty darn close. He’s got a few kinks to work out, but our young author is well on his way.

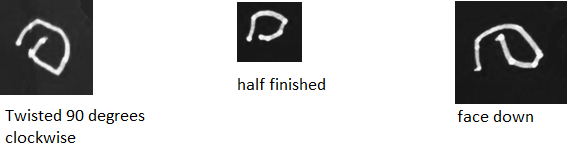

Like the handedness of his ‘e’s’:

Face-down e’s, inverted e’s and half-finished e’s. This is interesting because it’s a trend. Although I have no 5-year-old of my own, I’ve seen enough examples of their writing to notice that they tend to have trouble with handedness. Backwards P’s, b’s and the like are common in young children. There’s something interesting there, but it’s hard to say what. The same issues don’t seem to plague letters like T and A, which are symmetrical and for which the writer need not pay attention to handedness.